RESEARCH

Earth Systems Lab 2024

ESL is demonstrating the potential of machine learning for both scientific process and discovery. It is facilitating the development of new paradigms of engineering, from understanding disasters in real time to AI in orbit.

Artificial Intelligence is enabling a bold new era of these capabilities and FDL Europe’s goal is to explore new science and apply these new techniques in a safe, ethical and reproducible way to the biggest challenges in space science and exploration - for the good of all humankind.

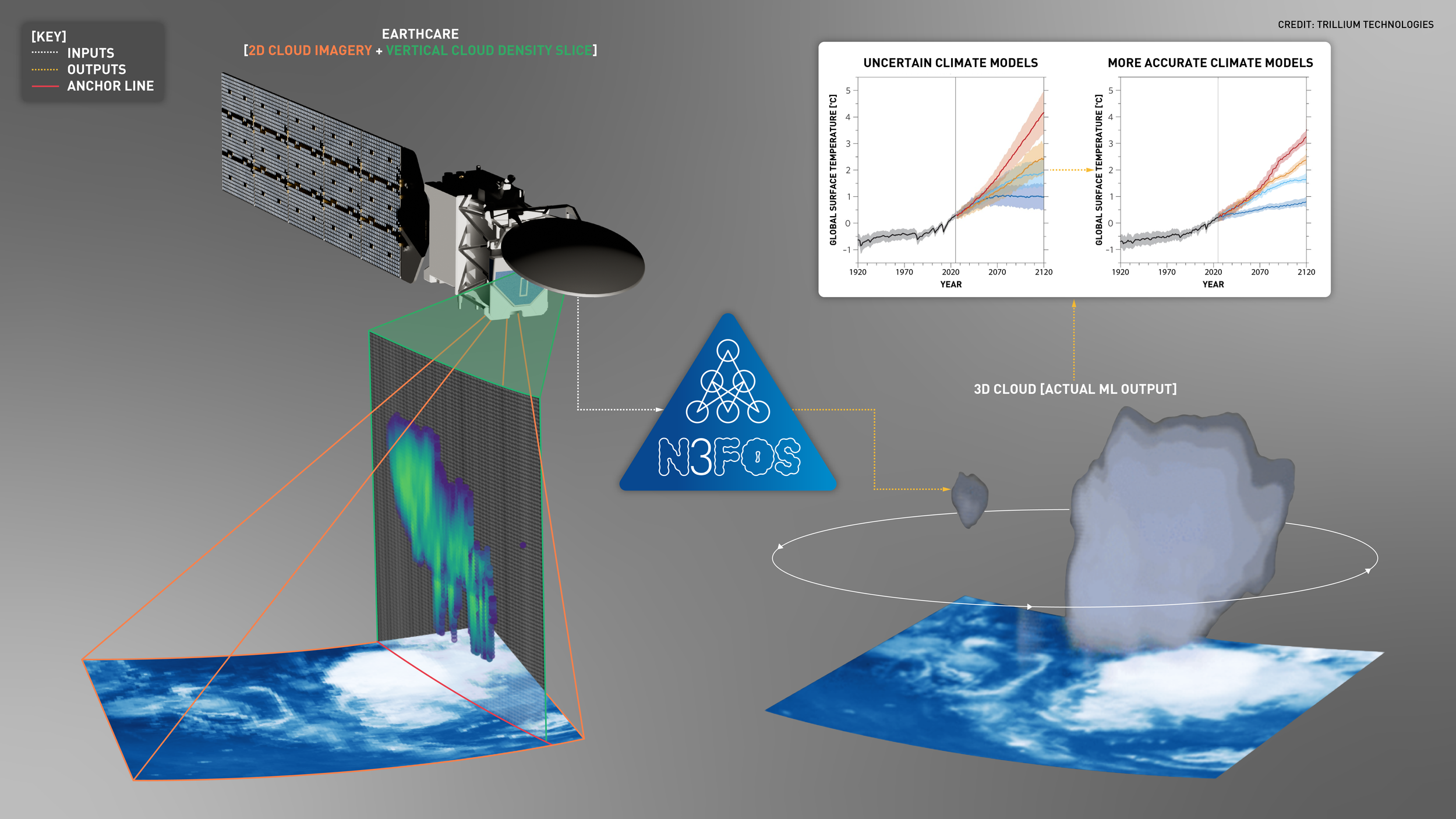

3D CLOUDS USING MULTI-SENSORS

ESA’s EarthCARE mission was successfully launched on May 28th 2024 and will use LIDAR, radar, and multi-band cameras to assemble a 3D snapshot of the atmosphere. 3D information about our atmosphere is not only useful for improving climate models, but also important for understanding Earth’s radiation balance, since the altitude and vertical distribution of clouds strongly influence their radiative properties and consequently Earth’s climate. EarthCARE will acquire data continuously along a narrow strip of the Earth, measuring aerosols and atmospheric composition with unprecedented accuracy. However, this strip represents only a small fraction of the atmosphere, compared to the 2D imagery acquired every day by satellites in geostationary and low Earth orbit. We aim to use machine learning to reconstruct the 3D structure of the atmosphere from multiple 2D datasets to deliver a robust model of our atmosphere on planetary scales and long timescales.

Can we perform instrument-to-instrument translation to derive 3D information from 2D measurements of the atmosphere?

Can we create flexible models that are ‘input sensor agnostic’ and translate different 2D sensors to 3D profiles?

Can we create flexible models that predict well outside the timeframe of the ground truth data?

Can we create, and validate, global 3D cloud profiles?

Multi-Sensor Predictions of 3D Cloud Profiles using Machine Learning - AGU 2024

Results

This research was accepted into the Machine Learning and the Physical Sciences Workshop at NeurIPS 2024. You can read the publication here.

The team used Self-Supervised Learning (SSL) via Masked Autoencoders (MAEs) to pre-train models using unlabelled MSG/SEVIRI images. The models were then fine-tuned for 3D cloud reconstruction using aligned pairs of MSG/SEVIRI and CloudSat/CPR radar reflectivity profiles.
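The masking step at the heart of MAE pre-training can be illustrated with a small NumPy sketch. It is not the team's implementation: the function names, the 4-pixel patch size, the 75% mask ratio, and the toy 16x16x3 image are all assumptions chosen for illustration. The idea is that an encoder sees only the visible patches, and the reconstruction loss is evaluated only on the hidden ones.

```python
import numpy as np


def random_patch_mask(image, patch_size=4, mask_ratio=0.75, seed=0):
    """Split an (H, W, C) image into patches and hide a random subset.

    Returns the visible patches, a boolean mask (True = hidden),
    and the full array of flattened patches.
    """
    h, w, c = image.shape
    assert h % patch_size == 0 and w % patch_size == 0
    gh, gw = h // patch_size, w // patch_size
    # (gh, p, gw, p, c) -> (gh, gw, p, p, c) -> (gh * gw, p * p * c)
    patches = (
        image.reshape(gh, patch_size, gw, patch_size, c)
        .transpose(0, 2, 1, 3, 4)
        .reshape(gh * gw, patch_size * patch_size * c)
    )
    n_patches = patches.shape[0]
    n_hidden = int(round(mask_ratio * n_patches))
    rng = np.random.default_rng(seed)
    hidden = rng.permutation(n_patches)[:n_hidden]
    mask = np.zeros(n_patches, dtype=bool)
    mask[hidden] = True
    return patches[~mask], mask, patches


def masked_reconstruction_loss(predicted, target, mask):
    """Mean squared error computed only over the hidden patches."""
    per_patch = ((predicted - target) ** 2).mean(axis=1)
    return float(per_patch[mask].mean())


# Toy stand-in for an unlabelled satellite image tile (assumed shape).
image = np.random.default_rng(1).random((16, 16, 3))
visible, mask, patches = random_patch_mask(image)
print(visible.shape, int(mask.sum()))
```

In a real pipeline the `predicted` array would come from a decoder that receives the encoded visible patches plus placeholder tokens for the hidden ones; here only the masking and loss bookkeeping are shown.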

A SatMAE model was adapted to encode the date, time, and location of the input data. Compared to a U-Net baseline, the models showed improved performance across RMSE, PSNR, and perceptual quality when predicting radar reflectivity. The SatMAE model achieved the lowest errors in the tropical convection belt, demonstrating its superior generalization.
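For readers unfamiliar with two of the metrics named above, the following sketch shows how RMSE and PSNR are commonly computed for a predicted reflectivity profile. The sample values and the 40 dB `data_range` are made up for illustration and are not results from the project; how the team normalised reflectivity before computing PSNR is not stated in the source.

```python
import math


def rmse(pred, target):
    """Root-mean-square error between two equal-length sequences."""
    n = len(pred)
    return math.sqrt(sum((p - t) ** 2 for p, t in zip(pred, target)) / n)


def psnr(pred, target, data_range):
    """Peak signal-to-noise ratio in dB: 20 * log10(data_range / RMSE)."""
    err = rmse(pred, target)
    if err == 0:
        return math.inf
    return 20 * math.log10(data_range / err)


# Hypothetical reflectivity values in dBZ along one vertical profile.
target = [-20.0, 0.0, 10.0, 20.0]
pred = [-18.0, 2.0, 8.0, 22.0]

profile_rmse = rmse(pred, target)
profile_psnr = psnr(pred, target, data_range=40.0)
print(round(profile_rmse, 3), round(profile_psnr, 3))
```

Lower RMSE and higher PSNR both indicate a closer match to the reference; PSNR simply expresses the same error relative to the dynamic range of the signal.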

In addition to predicting 3D radar reflectivity, the project also showed strong performance for 3D cloud type classification, helping further the goal of characterizing cloud properties and narrowing climate model uncertainties.

The upcoming ESA VIGIL mission to Lagrange point 5 (L5) will provide an unprecedented view of the Sun before it rotates towards the Earth. This year, the FDL Europe heliophysics team will focus on developing an early warning system for predicting solar eruptions based on a combination of data from the VIGIL, ESA Solar Orbiter, Proba-2, NASA STEREO, and NASA ACE satellites. VIGIL will be viewed as the first asset in a multi-viewpoint observation system feeding into a robust AI workflow to predict solar weather, distributed between the ground and space.

Can we combine diverse data on the Sun (both remote-sensing & in-situ) to reveal new correlations between data that lead to new scientific discoveries?

Can we discover which few of X possible instruments will provide the best forecast?

Can we use streaming images of the Sun and in-situ measurements of the solar environment to forecast coronal mass ejections (CMEs) a few days in advance?

The opportunity here is to understand in detail how multi-modal data (imagery and in-situ time-series measurements of the extended solar environment) can be used to understand processes on our Sun, and to make skillful predictions about space weather on long time horizons. The outcomes will likely inform the design of future ESA missions, including VIGIL-2.

Early warning for Solar Eruptions with VIGIL

Early warning for Solar Eruptions with VIGIL 2.0 - ESWW 2024

Results

The team focused their efforts on predicting solar flares and coronal mass ejections (CMEs), resulting in a framework called HelioWatch: a flexible, scalable, and modular approach to improving these predictions. Solar forecasting is challenging, and the team were able to match the state of the art while paving the way for easy integration of new mission data in the future.

The framework includes comprehensive model benchmarking and facilitates the exploration of correlations across datasets, which could enhance our understanding of solar activity and drive the continuous improvement of forecasting models. Models were tested on the multi-modal SDO-ML dataset, spanning 8 years from 2010 to 2018.

3D SAR for Forest Biomass

ESA’s new BIOMASS mission will launch a satellite in 2025 to measure forest biomass, height and disturbance on a planetary scale. It will carry the first P-band (435 MHz) SAR instrument ever flown in space, delivering 1 year of tomographic (3D) data and 4 years of interferometric change maps at 200 m spatial resolution. The challenge this year is to develop new machine learning workflows that rapidly produce accurate forest biomass, forest height and tree species maps from tomographic 3D SAR measurements. The FDL Europe team will take advantage of the TomoSense airborne SAR survey as a proxy for BIOMASS, combined with complementary LIDAR and hyperspectral data.

Can we use machine learning to rapidly estimate above-ground biomass, forest height and tree species information directly from calibrated SAR tomographic 3D data?

Can we use machine learning to rapidly produce 3D tomographic cubes of global forests from uncalibrated SAR acquisitions?

TomoSense was an airborne campaign providing tomographic SAR data at two wavelengths together with airborne and terrestrial LIDAR and ground-based forest surveys. The opportunity is to understand the insight that the BIOMASS mission will be able to provide when enhanced by state-of-the-art machine learning workflows and how machine learning can be used to optimise complex 3D processing workflows.

Tree Species Classification using Machine Learning and 3D Tomographic SAR - a case study in Northern Europe - AGU 2024

Results

The team developed models to predict canopy height and tree species using data from TomoSense. This precursor campaign to BIOMASS, captured using airborne sensors, provided richly labelled data from a test region in Germany.

Using a combination of P-band and L-band imagery, the team were able to accurately reconstruct tree height when compared to gold-standard LIDAR data (with a mean absolute error of approximately 5 m when using multiple input images). Interestingly, smaller Convolutional Neural Networks (CNNs) were effective, suggesting that the problem is data-limited, though this should be resolved when global BIOMASS data begins to be published later in 2025.

This challenge also explored species identification, a notoriously difficult task in forest monitoring given the presence of canopy occlusion, class imbalance and confusing targets (such as visually similar trees, or overlapping branches). It was found that integrating location information into model inputs boosted predictive performance, possibly because similar tree species tend to be naturally clustered. This would also be relevant for predictions in global contexts, where there is a great diversity of local biomes.
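The source does not say how location was encoded, so the following is only one plausible option, sketched under that assumption: latitude and longitude are mapped to a unit vector on the sphere and appended to each sample's feature vector. The function names, the feature values, and the coordinates are hypothetical.

```python
import math


def encode_location(lat_deg, lon_deg):
    """Map latitude/longitude in degrees to a 3D unit vector.

    Unlike raw degrees, this encoding is continuous across the
    +/-180 degree meridian, so nearby places get nearby features.
    """
    lat = math.radians(lat_deg)
    lon = math.radians(lon_deg)
    return (
        math.cos(lat) * math.cos(lon),
        math.cos(lat) * math.sin(lon),
        math.sin(lat),
    )


def add_location_features(features, lat_deg, lon_deg):
    """Append the location encoding to an existing feature vector."""
    return list(features) + list(encode_location(lat_deg, lon_deg))


# Hypothetical per-pixel SAR features at illustrative coordinates.
sample = add_location_features([0.12, 0.48, 0.07], lat_deg=50.6, lon_deg=6.4)
print(len(sample))
```

A classifier trained on such inputs can pick up on spatial clustering of species, which is the effect the paragraph above suggests may explain the improvement.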

SAR-FM: A Foundation Model for SAR

ML foundation models are gaining attention in the remote-sensing community because of their potential to be adapted for many analysis tasks and their ability to generalise between locations and times.

This ability is critical for users of synthetic aperture radar (SAR) data because the complex nature of SAR acquisitions means that analytical routines are difficult to transfer between geographies and epochs. A truly general SAR foundation model would represent a profound leap in utility for the community.

This year, the FDL Europe team will expand on the world-leading work from 2023 to investigate best practices for building a scientific SAR Foundation Model:

How should an Earth observation foundation model be used in common downstream scientific tasks? Through what data sampling strategies (tactical sampling) can we better design the sparse labelling strategy that is usually required?

How can we measure if a foundation model is capturing sensible spatial and temporal features across the planet?

How can a foundation model best deliver information from different input modalities (SAR, optical, and more) to downstream tasks?

How can we create flexible foundation models that are robust to missing modalities, unbalanced noise properties, and heterogeneous spatial and time resolution data?

This effort will deliver a novel SAR foundation model to complement existing optical/IR foundation models being built by both ESA and NASA. We believe ‘SAR-FM’ will have a wide-reaching and profound impact for the community, unlocking many downstream applications, particularly in areas occluded by clouds, or at night - such as during natural disasters.

Remote Sensing Segmentation with Foundation Models (on a Budget) - AGU 2024

Enhancing Satellite Data Interpretation through Soft Prompting in Embedding Space with Pre-trained Large Language Model - AGU 2024

Results

Two papers were accepted into the proceedings of AGU24 and the Foundation Models for Science workshop at NeurIPS 2024. Read more here.

The team explored approaches to enhance the adaptability and efficiency of Earth Observation Foundation Models (EO-FMs) for critical tasks such as flood detection. The integration of Synthetic Aperture Radar (SAR) data, coupled with advancements in machine learning techniques like Low-Rank Adaptation (LoRA), demonstrated significant potential in improving the performance of EO-FMs, particularly when applied to regions with limited labeled data.
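To show why LoRA is considered parameter-efficient, here is a minimal NumPy sketch of a single adapted linear layer. It is not the team's code: the class name, the 64x64 layer size, the rank of 4, and the scaling factor are assumptions for illustration. The pretrained weight stays frozen, and only two small matrices `A` and `B` would be trained.

```python
import numpy as np


class LoRALinear:
    """Frozen weight W plus a trainable low-rank update (alpha / rank) * B @ A."""

    def __init__(self, weight, rank=4, alpha=8.0, seed=0):
        rng = np.random.default_rng(seed)
        out_dim, in_dim = weight.shape
        self.weight = weight  # pretrained, kept frozen
        self.A = rng.normal(0.0, 0.01, (rank, in_dim))  # trainable
        self.B = np.zeros((out_dim, rank))  # trainable, zero-initialised
        self.scale = alpha / rank

    def __call__(self, x):
        # x has shape (batch, in_dim); output has shape (batch, out_dim).
        base = x @ self.weight.T
        update = (x @ self.A.T) @ self.B.T
        return base + self.scale * update

    def trainable_parameters(self):
        return self.A.size + self.B.size


rng = np.random.default_rng(42)
W = rng.normal(size=(64, 64))  # stand-in for one pretrained layer
layer = LoRALinear(W)
x = rng.normal(size=(2, 64))

print(layer.trainable_parameters(), W.size)
```

Because `B` starts at zero, the adapted layer initially reproduces the pretrained layer exactly, and fine-tuning only has to learn the low-rank correction. In this toy case that means 512 trainable values instead of 4096.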

This work focused on several challenges when using EO-FMs in practice: ease of fine-tuning on novel downstream tasks, appropriate model selection, and efficient adaptation of these models. Our work provides a solid foundation for the future development of EO-FMs that are not only efficient and adaptable but also capable of performing well in diverse and challenging environments.

The use of parameter-efficient techniques like LoRA will continue to play a critical role in making these models more scalable and widely applicable, particularly for disaster management and environmental monitoring in resource-constrained settings. Going forward, improving the generalization of EO-FMs across varied regions and tasks remains a key challenge that future research must address to fully realize the potential of these models in global Earth observation applications.